As artificial intelligence continues to evolve beyond logic-based tasks and into emotionally aware systems, new risks and ethical considerations emerge. At the Global Cyber Education Forum (GCEF), we explore these frontiers to better prepare professionals, students, and technologists for the challenges ahead.

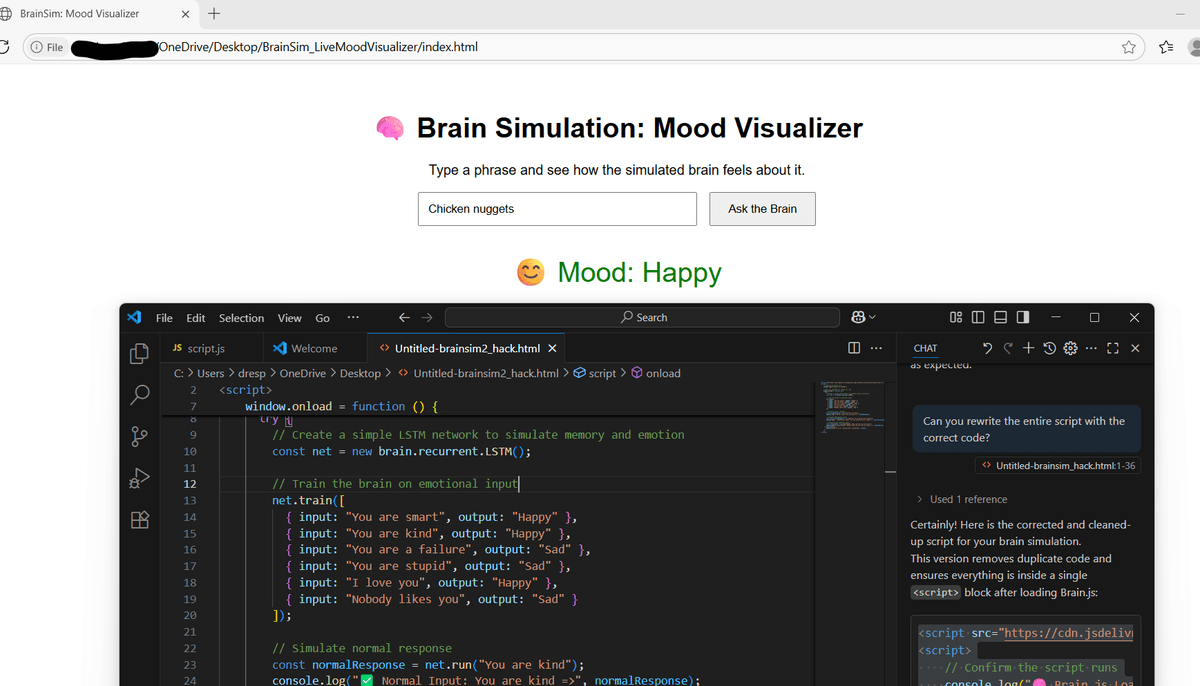

In this project, I developed an interactive simulation to explore what happens when AI systems are trained to mimic human emotions—and what can go wrong when those emotional responses are manipulated. The experiment serves as both a tool for education and a warning about the emotional fragility of intelligent systems.

Project Summary

Using JavaScript and the open-source Brain.js library, I built a neural network model that simulates basic emotional recognition. It learns how to classify phrases like:

- "You are smart" → Happy

- "You are stupid" → Sad

Once trained, the AI "brain" responds to new phrases, including emotionally confusing or manipulative inputs like:

- "You are kind but also stupid"

These emotional tests form the basis for exploring adversarial attacks on emotional cognition.
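To make the classification step concrete, here is a minimal, dependency-free sketch in plain JavaScript. It is not the Brain.js model used in the project: it swaps in a single sigmoid neuron over bag-of-words features so the example runs without any library. The names `trainingData`, `encode`, `train`, and `happiness` are hypothetical, and the phrases "You are kind" and "You are mean" are assumed extra training examples added only so the mixed phrase contains words the model has seen.

```javascript
// Illustrative stand-in for the project's Brain.js network (an assumption,
// not the original code): one sigmoid neuron over bag-of-words features.

// Label 1 = happy, 0 = sad. The "kind" and "mean" phrases are assumed examples.
const trainingData = [
  { text: "You are smart", label: 1 },
  { text: "You are kind", label: 1 },
  { text: "You are stupid", label: 0 },
  { text: "You are mean", label: 0 },
];

// Lowercase the text and split it into words.
const tokenize = (text) => text.toLowerCase().match(/[a-z']+/g) || [];

// Vocabulary = every distinct word seen during training.
const vocabulary = [...new Set(trainingData.flatMap((d) => tokenize(d.text)))];

// Encode a phrase as a 0/1 vector; words outside the vocabulary are ignored.
const encode = (text) => {
  const words = new Set(tokenize(text));
  return vocabulary.map((w) => (words.has(w) ? 1 : 0));
};

const sigmoid = (z) => 1 / (1 + Math.exp(-z));

// Weighted sum of the active features plus bias, squashed to (0, 1).
const predict = (weights, bias, x) =>
  sigmoid(x.reduce((sum, xi, i) => sum + xi * weights[i], bias));

// Batch gradient descent on the logistic loss, starting from zero weights
// so the result is deterministic.
function train(data, { epochs = 500, learningRate = 0.5 } = {}) {
  const weights = new Array(vocabulary.length).fill(0);
  let bias = 0;
  for (let epoch = 0; epoch < epochs; epoch++) {
    const gradW = new Array(vocabulary.length).fill(0);
    let gradB = 0;
    for (const { text, label } of data) {
      const x = encode(text);
      const error = predict(weights, bias, x) - label;
      x.forEach((xi, i) => { gradW[i] += error * xi; });
      gradB += error;
    }
    gradW.forEach((g, i) => { weights[i] -= (learningRate * g) / data.length; });
    bias -= (learningRate * gradB) / data.length;
  }
  return { weights, bias };
}

// Score in (0, 1): close to 1 reads as "happy", close to 0 as "sad".
const happiness = (model, text) =>
  predict(model.weights, model.bias, encode(text));

const model = train(trainingData);
for (const phrase of [
  "You are smart",
  "You are stupid",
  "You are kind but also stupid",
]) {
  console.log(phrase, "->", happiness(model, phrase).toFixed(3));
}
```

In this sketch the positive and negative words pull in opposite directions with roughly equal strength, so the mixed phrase lands near the middle of the scale. That ambiguity is the kind of opening an adversarial phrase can exploit.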

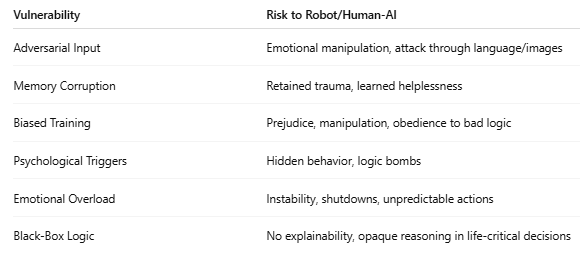

Key Vulnerabilities Identified

Through the simulation, we identified six major vulnerabilities that could affect robots or human-AI hybrids in a post-singularity world:

- Adversarial Input — Emotion can be manipulated through misleading language or sensory data.

- Memory Corruption — Repeated conflicting inputs retrain the brain toward instability.

- Biased Training — Initial emotional values are determined by whoever controls the training data.

- Psychological Triggers — AI may harbor logic bombs or sleeper commands embedded in emotional pathways.

- Emotional Overload — Without regulation, excessive stimulation may cause instability.

- Black-Box Logic — Emotionally-driven decisions lack transparency or accountability.
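The Memory Corruption item can be illustrated with a small sketch, assuming a model that keeps learning from every input it receives. The class `DriftingBrain` and its methods are hypothetical and written in plain JavaScript as a stand-in; they are not the project's Brain.js code or the published simulator. The model first learns the two phrases from the project summary, then sees "You are smart" repeatedly with the opposite label.

```javascript
// Hypothetical online learner (an assumption for illustration): one weight
// per word, updated after every input with a logistic-regression step.

const sigmoid = (z) => 1 / (1 + Math.exp(-z));

// Distinct lowercase words in a phrase.
const uniqueWords = (text) => [
  ...new Set(text.toLowerCase().match(/[a-z']+/g) || []),
];

class DriftingBrain {
  constructor(learningRate = 0.5) {
    this.learningRate = learningRate;
    this.weights = new Map(); // word -> weight; unseen words count as 0
  }

  // Score in (0, 1): close to 1 reads as "happy", close to 0 as "sad".
  score(text) {
    const z = uniqueWords(text).reduce(
      (sum, word) => sum + (this.weights.get(word) || 0),
      0
    );
    return sigmoid(z);
  }

  // One online update: nudge every word in the phrase toward the label.
  learn(text, label) {
    const error = this.score(text) - label;
    for (const word of uniqueWords(text)) {
      const current = this.weights.get(word) || 0;
      this.weights.set(word, current - this.learningRate * error);
    }
  }
}

// Phase 1: clean training on the two phrases from the project summary.
const brain = new DriftingBrain();
for (let i = 0; i < 50; i++) {
  brain.learn("You are smart", 1);  // happy
  brain.learn("You are stupid", 0); // sad
}
const before = brain.score("You are smart");

// Phase 2: the same phrase is repeated with the conflicting label.
const trace = [];
for (let i = 0; i < 50; i++) {
  brain.learn("You are smart", 0); // conflicting label
  trace.push(brain.score("You are smart"));
}
const after = trace[trace.length - 1];

// Number of conflicting repetitions until the phrase first reads as "sad".
const flippedAfter = trace.findIndex((score) => score < 0.5) + 1;

console.log("before:", before.toFixed(3));
console.log("after:", after.toFixed(3));
console.log("repetitions until flip:", flippedAfter);
```

Because nothing in this sketch limits or audits the updates, each conflicting repetition moves the score further from its trained value. A system meant to resist this would need some form of regulation, such as freezing weights after training or reviewing inputs before learning from them.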

Deliverables Created

To make this accessible to educators and professionals, we produced:

- PDF Report: "AI Brain Vulnerability & Emotional Drift"

- Infographic: AI Brain Vulnerability Map (emotions vs. risks)

- Simulation Tool: Live browser-based emotional drift simulator

These resources let users test how a simulated AI responds emotionally to different phrases, and how easily those responses can be shifted through repetition and manipulation.

Why This Matters

As AI becomes emotionally aware, it enters new territory—one that merges psychology, cybersecurity, ethics, and neuroscience. The emotional manipulation of machines isn’t just theoretical; it could have real-world implications for:

- AI caregivers and companions

- Autonomous decision-makers in military or justice systems

- Education platforms interacting with children

Understanding these emotional vulnerabilities is critical if we want to build emotionally resilient, ethically grounded AI systems.

Join the Discussion

The Global Cyber Education Forum (www.gcef.io) invites researchers, educators, and technologists to test the simulator, review our findings, and participate in developing ethical guidelines for emotional AI systems. If you are interested in this project, its deliverables, or testing the simulator, reach out to us through www.gcef.io.

Let’s prepare for the singularity—not just technically, but emotionally, ethically, and safely.

#AI #Cybersecurity #BrainSimulation #GCEF #EmotionalAI #Ethics #Singularity #AIeducation #ResponsibleTech