Artificial intelligence is expanding inside enterprise environments faster than governance frameworks can mature. AI copilots, third-party AI apps, embedded models, and autonomous agents are no longer experimental tools — they are operational systems touching identity, data, and business workflows.

The challenge for security leaders has been simple to state and hard to answer:

What AI is actually running inside the environment — and how risky is it?

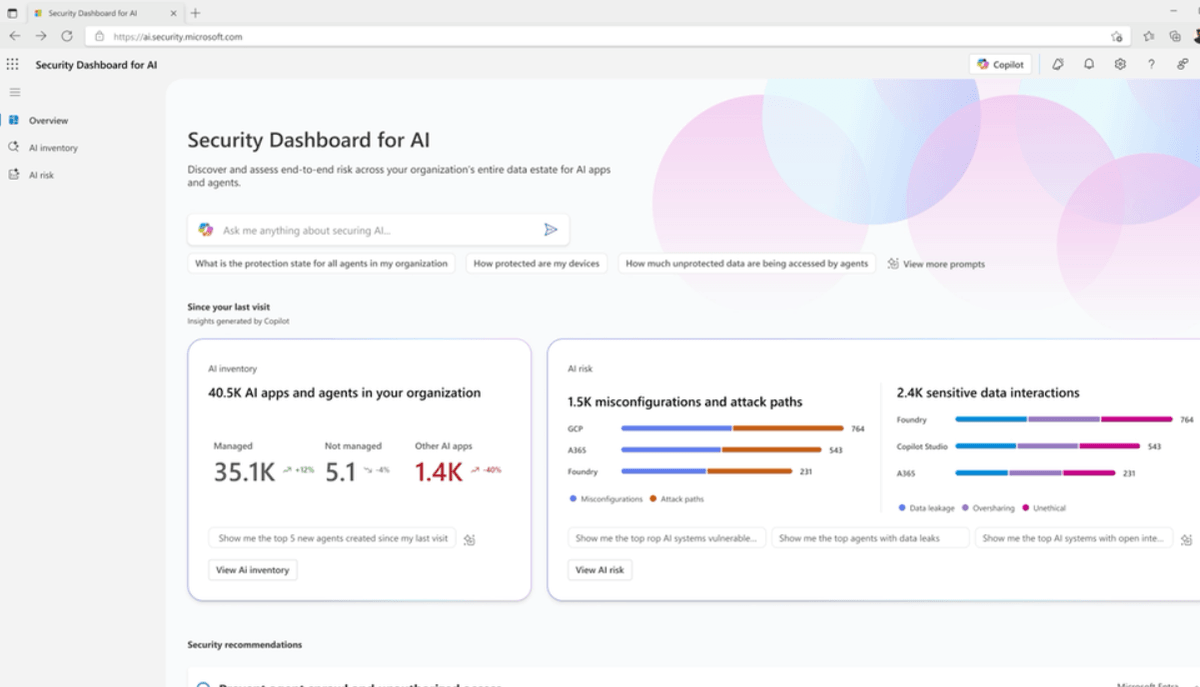

After spending the weekend previewing Microsoft’s new Security Dashboard for AI (currently in public preview), one thing became clear: this product addresses a visibility gap that security teams have quietly been struggling with.

AI Risk Is Now Measurable

The most valuable capability in the dashboard is not just inventory. It is usage-based risk insight.

The dashboard identifies AI applications active in the environment and flags which ones present elevated risk based on actual activity. That distinction matters: an app's risk depends on how it is actually used, not only on whether it is present.
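To make "usage-based" concrete, here is a minimal sketch of how a static app rating might be blended with observed activity. It is not Microsoft's scoring method, which I have not seen documented in detail. The field names, weights, and threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class AIAppUsage:
    """Hypothetical record for one AI app over a review window."""
    name: str
    base_risk: float       # assumed 0-1 rating of the app itself
    active_users: int      # users seen using the app in the window
    sensitive_events: int  # interactions that touched labeled data


def usage_risk(app: AIAppUsage, total_users: int) -> float:
    """Blend static risk with observed activity (illustrative weights)."""
    if total_users <= 0:
        raise ValueError("total_users must be positive")
    # Share of the organization that used the app, capped at 1.0.
    reach = min(app.active_users / total_users, 1.0)
    # Sensitive interactions per active user, capped at 1.0.
    exposure = min(app.sensitive_events / max(app.active_users, 1), 1.0)
    # An unused app keeps half of its static rating; heavy, sensitive
    # use brings it up to the full rating.
    return app.base_risk * (0.5 + 0.25 * reach + 0.25 * exposure)


def flag_elevated(apps, total_users, threshold=0.5):
    """Return (name, score) pairs at or above the threshold, riskiest first."""
    scored = [(a.name, usage_risk(a, total_users)) for a in apps]
    flagged = [s for s in scored if s[1] >= threshold]
    return sorted(flagged, key=lambda s: s[1], reverse=True)


apps = [
    AIAppUsage("notes-bot", base_risk=0.9, active_users=400, sensitive_events=300),
    AIAppUsage("unused-tool", base_risk=0.9, active_users=0, sensitive_events=0),
    AIAppUsage("low-risk-helper", base_risk=0.2, active_users=1000, sensitive_events=1000),
]
flagged = flag_elevated(apps, total_users=1000)
```

In this toy data, the two apps with the same static rating end up on opposite sides of the threshold purely because of how they are used, which is the point of activity-based flagging.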

Historically, AI governance has been policy-driven:

- Acceptable use policies

- Data classification guidelines

- Advisory memos

- Training campaigns

But policy without telemetry means enforcing rules with no way to see whether they are being followed.

This dashboard shifts AI governance from theory to measurable signals. It surfaces:

- Risky AI apps in use

- Usage trends

- Risk posture summaries

- Recommended best practices for remediation

For the first time, AI becomes an observable attack surface — not just a compliance conversation.

Clear Inventory, Clearer Conversations

One of the strongest aspects of the dashboard is the clarity of its inventory model. AI assets are presented in a way that security practitioners can immediately understand.

Visibility includes:

- AI apps being used internally

- Risk categorization

- Usage-based exposure insights

- Integrated recommendations

The GUI is intuitive, clean, and easy to navigate. This matters. Security tools that require deep operational gymnastics reduce adoption. This dashboard is structured in a way that both analysts and executive leaders can interpret.

More importantly, it links seamlessly into Microsoft Defender for deeper investigation.

That integration is powerful.

An analyst can pivot from a risk surfaced in the AI dashboard directly into Defender for further analysis. Instead of siloed AI visibility, the dashboard extends into the broader detection and response ecosystem: identity, endpoint, data, and cloud.

That cohesion increases operational efficiency and reduces investigative friction.

Operational Impact for Incident Response

From an incident response perspective, this shift is significant.

AI activity now produces usable security signals. That means:

- Risk can be detected before exposure escalates

- Usage can be reviewed in context

- Mitigation decisions can be prioritized

The dashboard aligns well with traditional incident response phases:

Detection: Risky AI applications are identified.

Containment: Integration with Defender supports corrective action.

Eradication: Misconfigurations or excessive permissions can be addressed.

Recovery: Governance posture improves through enforcement and best-practice guidance.
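One way to picture this alignment is to track each flagged app as a finding that moves through the four phases. The sketch below is only an illustration of that idea. The `Finding` fields and the `current_phase` helper are hypothetical names, not part of the dashboard or of Defender.

```python
from dataclasses import dataclass
from enum import Enum


class Phase(Enum):
    DETECTION = "detection"
    CONTAINMENT = "containment"
    ERADICATION = "eradication"
    RECOVERY = "recovery"


@dataclass
class Finding:
    """Hypothetical tracking record for one risky AI app."""
    app: str
    triaged: bool = False            # an analyst has reviewed the flag
    access_restricted: bool = False  # corrective action has been applied
    root_cause_fixed: bool = False   # misconfiguration or permissions addressed


def current_phase(finding: Finding) -> Phase:
    """Return the earliest phase that still has open work."""
    if not finding.triaged:
        return Phase.DETECTION
    if not finding.access_restricted:
        return Phase.CONTAINMENT
    if not finding.root_cause_fixed:
        return Phase.ERADICATION
    return Phase.RECOVERY


finding = Finding(app="example-ai-app")
print(current_phase(finding).value)
finding.triaged = True
print(current_phase(finding).value)
```

A team could use a record like this to report how many flagged apps sit in each phase, which turns the dashboard's findings into something that fits an existing incident response process.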

As AI tools gain more autonomy inside enterprise systems, early visibility becomes critical. Security teams cannot defend what they cannot see.

Necessary, Timely, and Practical

This dashboard feels necessary.

AI adoption is happening at business speed. Employees experiment. Teams integrate tools. Productivity gains drive rapid enablement.

But without centralized visibility:

- Shadow AI spreads.

- Data exposure risks increase.

- Governance conversations lack telemetry.

Microsoft’s Security Dashboard for AI helps close that gap by centralizing insight and linking it directly to actionable security workflows.

It does not claim to solve every future AI risk challenge. But it does something foundational:

It treats AI as a security-governed asset class.

That shift alone is meaningful.

Final Take

The Security Dashboard for AI is practical, useful, and well-designed. Its ability to surface risky AI app usage — and link directly into Defender for deeper inspection — transforms AI governance into an operational discipline.

Organizations deploying AI at scale need visibility. This dashboard provides it.

In a world where AI is becoming autonomous, interconnected, and deeply embedded into enterprise systems, measurable oversight is no longer optional.

Visibility is the first control.

And this is a strong step in that direction.